September 2013

This past spring I took a data mining class and spent some time working with the IMDB database. It is freely available, and there are some nifty tools for working with it. The goal of my data mining project was to develop a decision tree or a rule-based classifier that could predict a movie's average user rating from its other attributes. Ideally, we would have a decision tree that said something like “if a movie is made by Quentin Tarantino and stars Brad Pitt, then it will have an average user rating of 9/10.” Well, the decision tree turned out to be quite a bit more complicated and less insightful than we were hoping, but in the process of building it we came across some interesting findings, which this post summarizes.

Note: the data set was filtered to only include movies that meet the following constraints:

When building a rule-based classifier, rules similar to this kept showing up:

if genre = 'Drama' then user_rating > 7

if genre = 'Horror' then user_rating < 5

This got us thinking that perhaps movies of certain genres just tend to be better. The following table seems to support that idea.

| Genre | Average User Rating |
|---|---|
| Documentary | 6.3 |
| Animation | 6.0 |
| Western | 5.9 |
| Romance | 5.9 |
| Drama | 5.7 |
| Crime | 5.7 |
| Mystery | 5.7 |
| Adventure | 5.6 |
| Family | 5.5 |
| Comedy | 5.5 |
| Fantasy | 5.4 |
| Thriller | 5.1 |
| Action | 5.1 |
| Sci-Fi | 4.9 |
| Horror | 4.4 |
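For anyone curious how a table like this could be computed, here is a minimal sketch in Python. The records are made-up toy data, not the real IMDB data set, and the function name `average_rating_by_genre` is just something I picked for illustration. It also assumes that a movie listed under several genres counts once toward each of them, which may not match how the numbers above were produced.

```python
from collections import defaultdict

# Hypothetical toy records: (title, genres, average user rating).
# These are NOT real IMDB values; they only illustrate the calculation.
movies = [
    ("Movie A", ["Documentary"], 7.0),
    ("Movie B", ["Horror", "Thriller"], 4.0),
    ("Movie C", ["Horror"], 5.0),
    ("Movie D", ["Documentary", "Drama"], 6.0),
]


def average_rating_by_genre(records):
    """Return {genre: mean rating}, counting a movie once per genre it lists."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for _title, genres, rating in records:
        for genre in genres:
            totals[genre] += rating
            counts[genre] += 1
    return {genre: totals[genre] / counts[genre] for genre in totals}


genre_averages = average_rating_by_genre(movies)

# Print genres from highest to lowest average, like the table above.
for genre, avg in sorted(genre_averages.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{genre}: {avg:.1f}")
```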

So it looks like either there are a lot of crappy horror movies out there, or people just don’t like horror movies as much as they like documentaries. It also happens that documentaries are, on average, the cheapest genre of movie to produce, as the chart below shows.

Animation movies are also very popular, but their high cost is prohibitive. They make up only 1% of the US movies in the IMDB database:

One of the data attributes that lent itself really well to data mining was the MPAA rating attribute. This attribute looked something like:

Rated R for strong violence, language and some sexual content/nudity.

These ratings worked so well for data mining because the MPAA likes to use certain keywords in its ratings: language, drugs, sex, violence, and nudity. So the rating above could be broken down into a series of booleans indicating that the movie contained violence, language, sex and nudity, but didn’t contain any drugs. I was kinda expecting to find some dark statistic like “movies with violence and drugs tend to be rated higher than those without,” but it turned out that MPAA ratings weren’t a very good predictor of user ratings. Actually, the presence of any of those keywords had a slight negative correlation with the user rating attribute! Maybe there’s hope yet for humanity.

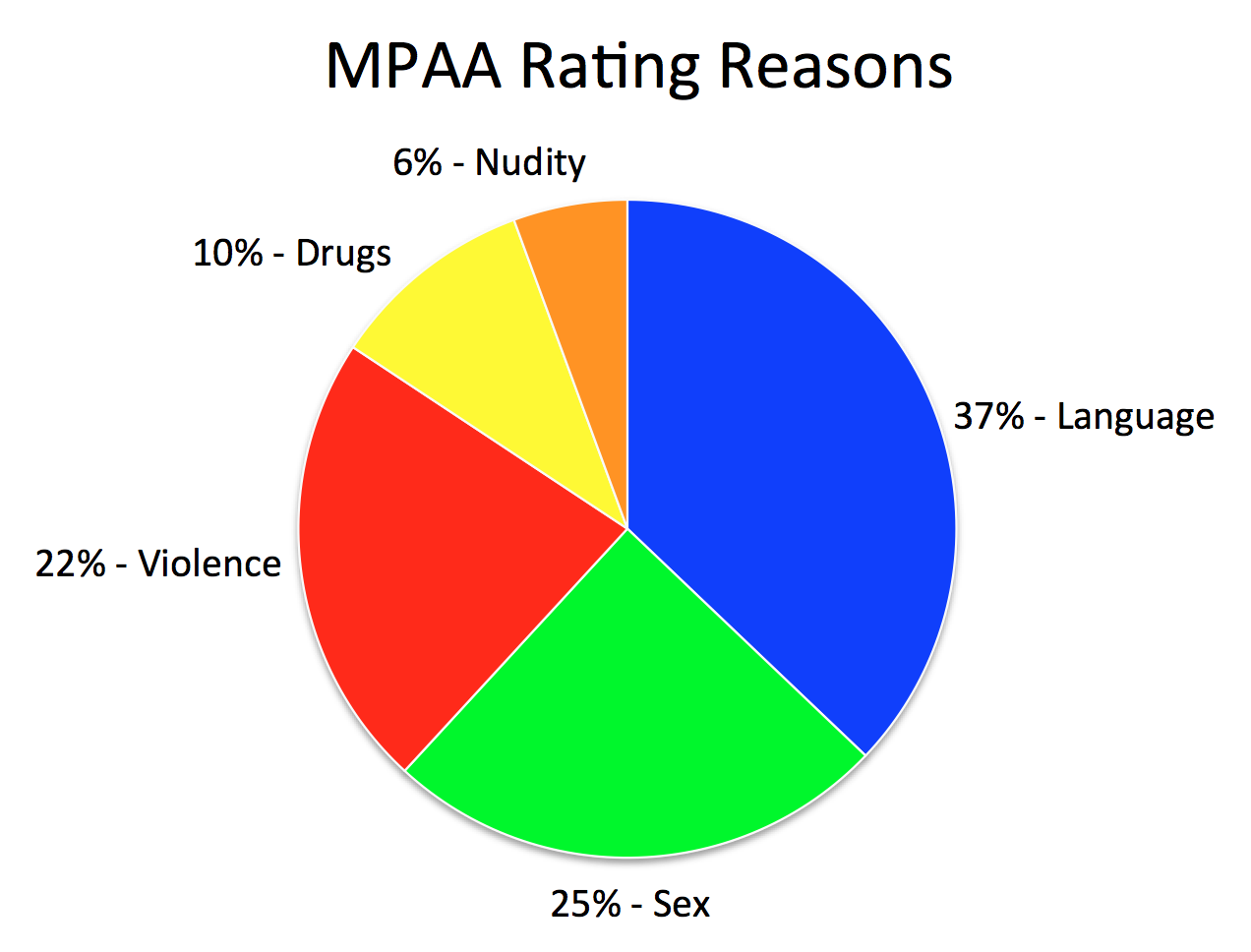
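The keyword breakdown described above can be sketched in a few lines of Python. This is only an illustration, not the exact code from the project: the `mpaa_flags` name and the regular expressions are assumptions, and matching on word stems (so that "sexual" counts as "sex" and "drugs" as "drug") is my guess at a reasonable rule.

```python
import re

# Assumed keyword stems. "sex" also matches "sexual", "drug" matches "drugs",
# "violen" matches "violence"/"violent", and "nud" matches "nudity"/"nude".
KEYWORD_PATTERNS = {
    "language": re.compile(r"\blanguage\b", re.IGNORECASE),
    "drugs": re.compile(r"\bdrug", re.IGNORECASE),
    "sex": re.compile(r"\bsex", re.IGNORECASE),
    "violence": re.compile(r"\bviolen", re.IGNORECASE),
    "nudity": re.compile(r"\bnud", re.IGNORECASE),
}


def mpaa_flags(reason):
    """Turn an MPAA rating explanation into one boolean per keyword."""
    return {name: bool(pattern.search(reason))
            for name, pattern in KEYWORD_PATTERNS.items()}


flags = mpaa_flags(
    "Rated R for strong violence, language and some sexual content/nudity."
)
print(flags)
# {'language': True, 'drugs': False, 'sex': True, 'violence': True, 'nudity': True}
```

Once every movie has these five booleans, each one can be treated as an ordinary attribute and compared against the user rating.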

So the MPAA ratings weren’t very useful for predicting user ratings, but they are still useful for looking at what kind of obscenities are filling our movies:

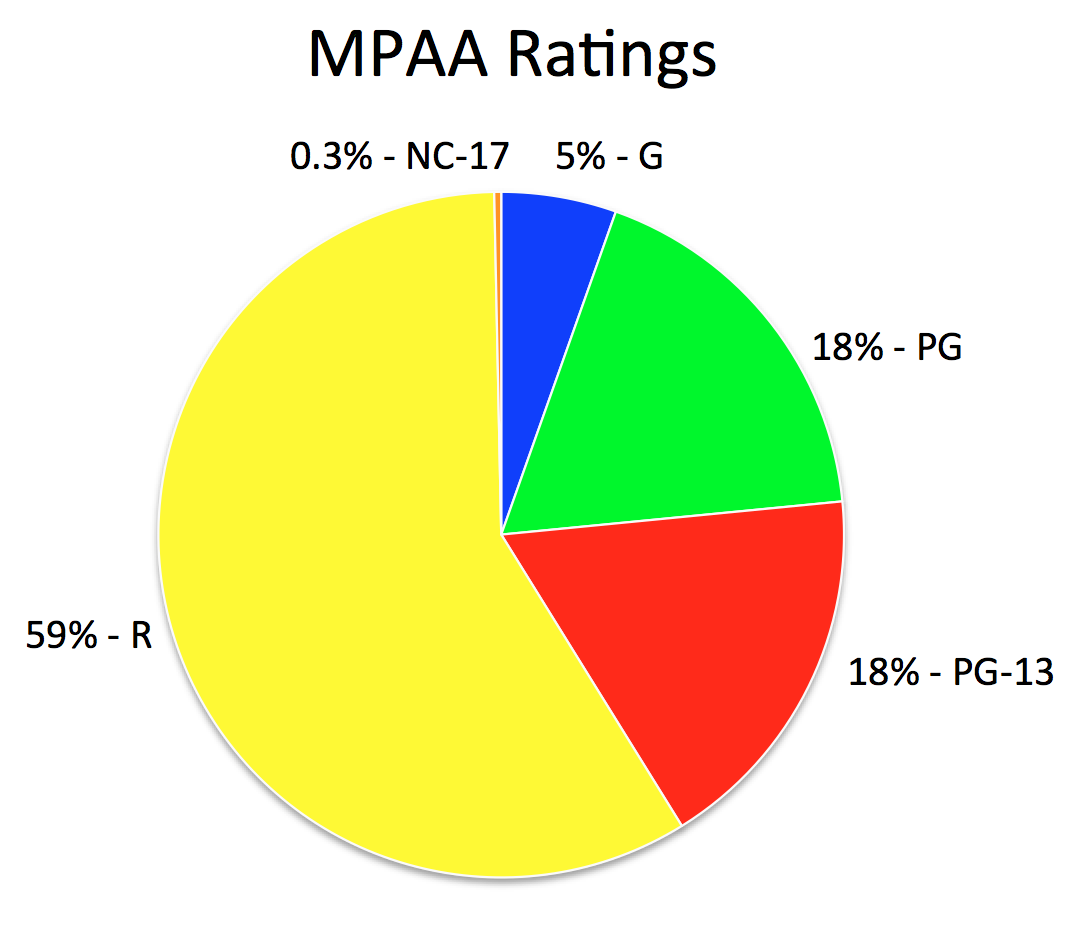

We can also look at rating distributions:

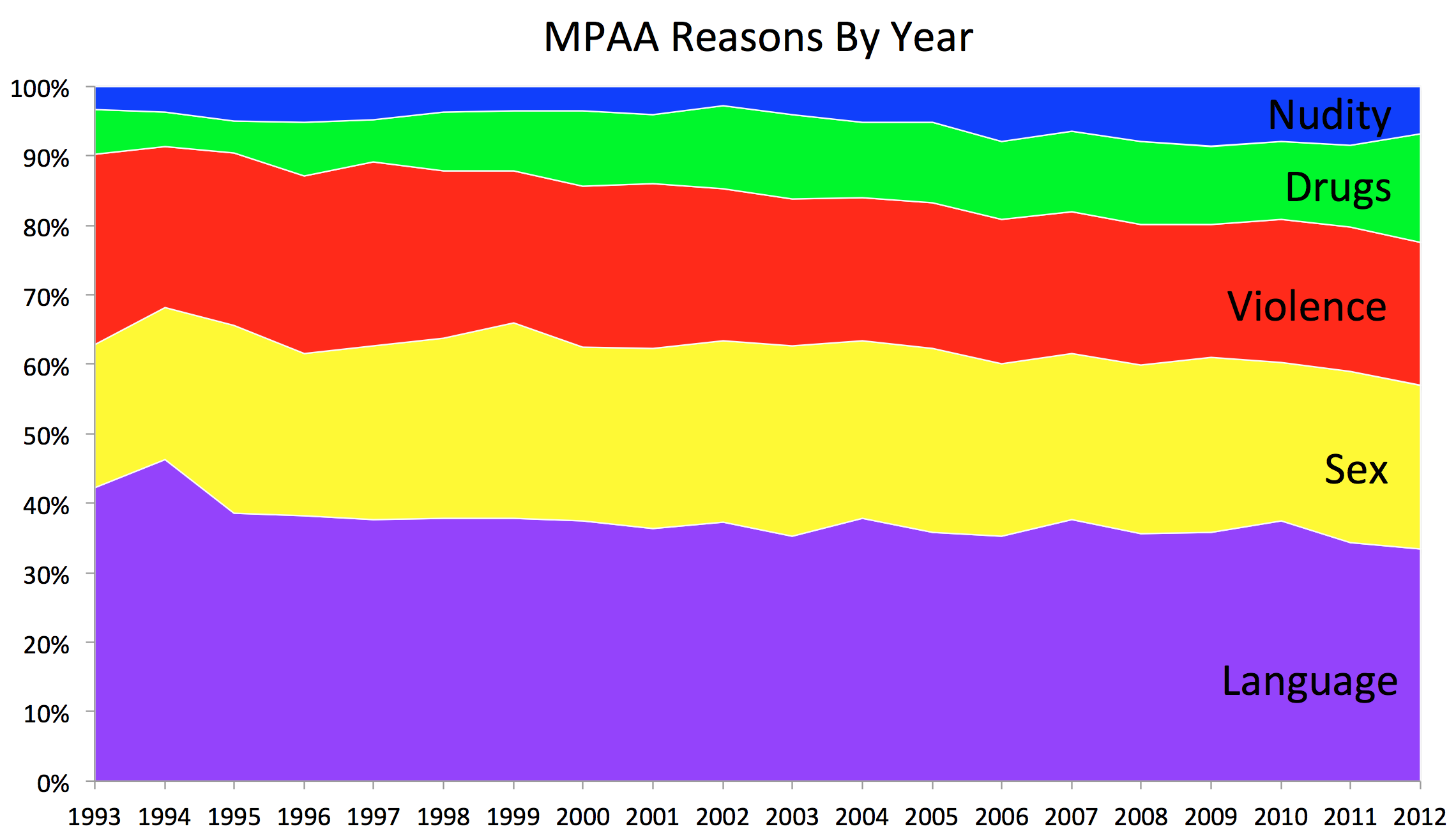

And how rating distributions have changed over the past 20 years:

I was surprised that the percentage of ‘R’ rated movies being produced has declined over the past 20 years. I wonder if the MPAA is just getting less sensitive or if Hollywood is really becoming more PG as the chart above suggests.

While MPAA ratings and genres are interesting to look at, the real predictors of how good a movie will be should be the people who made it. Here are some people who consistently make great movies:

Note: Only includes directors with at least 3 movies, and actors or actresses with at least 20 movies.

| Director | Average user rating | Number of movies |
|---|---|---|
| Nolan, Christopher | 8.41 | 7 |
| Unkrich, Lee | 8.13 | 4 |
| Darabont, Frank | 7.98 | 4 |
| Jackson, Peter | 7.94 | 7 |
| Kubrick, Stanley | 7.88 | 13 |
| Aronofsky, Darren | 7.86 | 5 |
| Tarantino, Quentin | 7.84 | 13 |
| Bird, Brad | 7.83 | 4 |
| Wright, Edgar | 7.78 | 4 |
| Vaughn, Matthew | 7.77 | 3 |
| Fincher, David | 7.76 | 9 |
| Affleck, Ben | 7.73 | 3 |
| Ritchie, Guy | 7.65 | 4 |
| Sharpsteen, Ben | 7.65 | 4 |

| Actor | Average user rating | Number of movies |
|---|---|---|
| Hitchcock, Alfred | 7.61 | 26 |
| Chaplin, Charles | 7.33 | 30 |
| Serkis, Andy | 7.27 | 20 |
| Lloyd, Harold | 7.18 | 21 |
| Bale, Christian | 7.11 | 28 |
| DiCaprio, Leonardo | 7.10 | 27 |
| Parker, Trey | 7.07 | 35 |
| Eckhardt, Oliver | 7.00 | 25 |
| Rickman, Alan | 6.98 | 32 |
| Norton, Edward | 6.97 | 28 |
| Fishman, Duke | 6.97 | 20 |
| Howard, Art | 6.97 | 26 |
| Guinness, Alec | 6.95 | 22 |
| Goelz, Dave | 6.95 | 44 |
| Stone, Matt | 6.95 | 23 |

| Actress | Average user rating | Number of movies |
|---|---|---|
| Bonham Carter, Helena | 7.17 | 26 |
| Swanson, Gloria | 7.08 | 29 |
| McGowan, Mickie | 7.06 | 29 |
| Blanchett, Cate | 7.06 | 29 |
| Bergman, Mary Kay | 7.06 | 20 |
| Lynn, Sherry | 7.03 | 32 |
| Cooper, Gladys | 6.98 | 30 |
| Harlow, Jean | 6.96 | 29 |
| Davies, Marion | 6.95 | 41 |
| Dietrich, Marlene | 6.95 | 33 |
| Hepburn, Audrey | 6.94 | 21 |
| Plowright, Hilda | 6.91 | 46 |
| Gale, Norah | 6.90 | 20 |
| Winslet, Kate | 6.89 | 21 |
| Michelson, Esther | 6.88 | 23 |
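Rankings like the ones above boil down to grouping ratings by person, dropping anyone below the minimum movie count, and sorting by average. Here is a rough Python sketch of that idea. The `top_people` function and the toy credits are hypothetical, and the real tables may have been built differently.

```python
from collections import defaultdict


def top_people(credits, min_movies, limit=15):
    """Rank people by mean rating, keeping only those with enough movies.

    credits: iterable of (person, rating) pairs, one pair per movie credit.
    Returns a list of (person, mean_rating, movie_count), best first.
    """
    ratings = defaultdict(list)
    for person, rating in credits:
        ratings[person].append(rating)

    ranked = [
        (person, sum(rs) / len(rs), len(rs))
        for person, rs in ratings.items()
        if len(rs) >= min_movies  # e.g. 3 for directors, 20 for actors
    ]
    ranked.sort(key=lambda row: row[1], reverse=True)
    return ranked[:limit]


# Hypothetical toy credits, not real IMDB data.
credits = [
    ("Director A", 8.0), ("Director A", 9.0), ("Director A", 7.0),
    ("Director B", 9.5), ("Director B", 9.5),  # only 2 movies, filtered out
    ("Director C", 6.0), ("Director C", 6.5), ("Director C", 7.0),
]

top_directors = top_people(credits, min_movies=3)
for person, mean_rating, count in top_directors:
    print(f"{person}: {mean_rating:.2f} ({count} movies)")
```

The minimum count matters: without it, someone with a single highly rated movie would outrank people with a long, consistent track record.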

Well that gives a quick snapshot of some of our findings. It would be cool to be able to pinpoint the exact ingredients that make a great movie, but if that were possible then making movies would be more of a science than an art.